4. Logstash

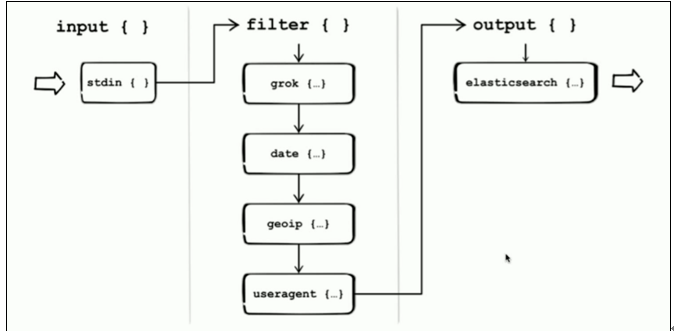

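4.1 Logstash architecture

Input (collect) ---> Filter (parse and enrich) ---> Output (send on)

Commonly used filter plugins:

Grok: matches and extracts the fields to be collected

Date: parses date values

Geoip: adds geographic location information

Useragent: parses the client's User-Agent string (browser, operating system, device)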
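As a rough sketch of how the four filters above fit into one pipeline, the configuration below assumes combined-format nginx/Apache access logs in a hypothetical file /var/log/nginx/access.log. Grok uses the built-in COMBINEDAPACHELOG pattern, whose clientip, timestamp and agent fields feed the other three filters.

```conf
input {
  file {
    # Hypothetical log location; adjust to your environment
    path           => ["/var/log/nginx/access.log"]
    start_position => "beginning"
  }
}

filter {
  # Grok: split the raw line into clientip, timestamp, verb, response, agent, ...
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
  # Date: use the request time from the log line as @timestamp
  date {
    match  => ["timestamp", "dd/MMM/yyyy:HH:mm:ss Z"]
    locale => "en"   # month names such as "Jun" are English
  }
  # Geoip: look up the client IP and add location data under [geoip]
  geoip {
    source => "clientip"
  }
  # Useragent: parse the User-Agent string into browser/OS/device fields
  useragent {
    source => "agent"
    target => "useragent"
  }
}

output {
  stdout { codec => "rubydebug" }
}
```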

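4.2 Installing Logstash

[root@localhost logstash]# tar -zxvf logstash-6.3.1.tar.gz

[root@localhost logstash]# cd logstash-6.3.1

[root@localhost logstash-6.3.1]# mkdir -p config

[root@localhost logstash-6.3.1]# vi config/test.conf

A minimal pipeline that reads events from standard input and prints them to standard output: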

input {

stdin { }

}

output {

stdout {codec=>"rubydebug"}

}

[root@localhost logstash-6.3.1]# ./bin/logstash -f config/test.conf

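4.3 Basic Logstash operations

The following pipeline uses the line codec to split the input into one event per line and the json codec to print each event as JSON:

[root@localhost logstash-6.3.1]# vi config/test.conf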

input {

stdin {codec=>line}

}

output {

stdout {codec=>json}

}

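[root@localhost logstash-6.3.1]# printf 'foo\nbar\n' | ./bin/logstash -f config/test.conf

This produces two events, one with message foo and one with message bar. The json codec writes events back to back without a newline; json_lines adds one after each event.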

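4.4 Logstash input plugins

• stdin

Reads from standard input: data can be piped in or typed interactively in the terminal.

Common options (available on every input plugin):

codec: type codec; the codec used to decode the incoming data

type: type string; a custom event type that later stages can test in conditionals

tags: type array; custom tags for the event, also usable in later conditionals

add_field: type hash; extra fields to add to the event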

input{

stdin{

codec => "plain"

tags => ["test"]

type => "std"

add_field => {"key"=>"value"}

}

}

output{

stdout{

codec => “rubydebug”

}

}

[root@localhost logstash-6.3.1]# echo "test" | ./bin/logstash -f config/test.conf

{

"@version" => "1",

"key" => "value",

"message" => "test",

"type" => "std",

"tags" => [

[0] "test"

],

"host" => "localhost",

"@timestamp" => 2019-03-24T12:20:16.334Z

}
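The type and tags set on an input are mainly useful in conditionals further down the pipeline. A minimal sketch, assuming the stdin example above (type std, tag test); the added field name checked is hypothetical:

```conf
filter {
  # Only events from the stdin input above carry type "std" and tag "test"
  if [type] == "std" and "test" in [tags] {
    mutate {
      add_field => { "checked" => "true" }
    }
  }
}
```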

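• file

Reads data from files, such as ordinary log files.

Options:

path => ["/var/log/**/*.log", "/var/log/messages"]: files to read; absolute paths, glob patterns allowed

exclude => "*.gz": files to skip (matched against the file name, not the full path)

sincedb_path => "/path/to/sincedb": where the sincedb file, which records how far each file has been read, is stored; point it at a dedicated file, never at a log file that is being read

start_position => "beginning" or "end": whether to read a file from the beginning or only new lines (default "end"); it only affects files that have no sincedb record yet

stat_interval => 1: how often, in seconds, to check files for new data (default 1 second)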

input {

file {

path => ["/home/elk/logstsh/config/nginx_logs"]

start_position => "beginning"

type => "web"

}

}

output {

stdout {

codec => "rubydebug"

}

}

{

"path" => "/home/elk/logstsh/config/nginx_logs",

"message" => "79.136.114.202 - - [04/Jun/2015:07:06:35 +0000] \"GET /downloads/product_1 HTTP/1.1\" 404 334 \"-\" \"Debian APT-HTTP/1.3 (0.8.16~exp12ubuntu10.22)\"",

"@timestamp" => 2019-03-24T12:47:20.900Z,

"host" => "localhost",

"type" => "web",

"@version" => "1"

}
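A variant that also uses the other options listed above, as a sketch: the paths come from the option list and the type value syslog is only illustrative. Setting sincedb_path to /dev/null is a common trick while testing, so that Logstash forgets read positions and rereads the files from the beginning on every restart; in production it would cause duplicate events.

```conf
input {
  file {
    path           => ["/var/log/**/*.log", "/var/log/messages"]
    exclude        => "*.gz"         # skip rotated, compressed logs
    sincedb_path   => "/dev/null"    # testing only: do not persist read positions
    start_position => "beginning"    # read existing content, not only new lines
    stat_interval  => 1              # check for new data every second
    type           => "syslog"       # illustrative type for later conditionals
  }
}

output {
  stdout { codec => "rubydebug" }
}
```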

• elasticsearch

Reads the documents of an Elasticsearch index that match a query:

input {

elasticsearch {

hosts => "192.168.14.10"

index => "atguigu"

query => '{ "query": { "match_all": {} }}'

}

}

output {

stdout {

codec => "rubydebug"

}

}

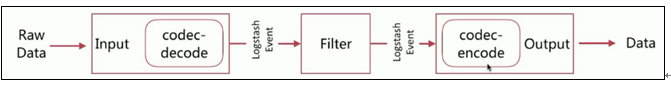
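For larger indices the elasticsearch input pages through the results with the scroll API. A sketch using the same host and index as above; the size and scroll values are only illustrative. With docinfo => true each event also receives the source document's index, type and id under [@metadata], which rubydebug prints only when metadata => true.

```conf
input {
  elasticsearch {
    hosts   => "192.168.14.10"
    index   => "atguigu"
    query   => '{ "query": { "match_all": {} } }'
    size    => 500     # documents fetched per scroll page
    scroll  => "5m"    # how long Elasticsearch keeps the scroll context alive
    docinfo => true    # copy _index, _type and _id into [@metadata]
  }
}

output {
  stdout {
    codec => rubydebug { metadata => true }
  }
}
```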

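4.5 Logstash filters

Filters are what make Logstash so powerful: they can process data in many ways, such as parsing it, removing fields, and converting types. Common filter plugins:

date: parses dates

grok: parses text with regular expressions

dissect: parses text by splitting it on delimiters

mutate: modifies fields, e.g. renames, removes or replaces them

json: parses a field's JSON content into a specified field

geoip: adds geographic location data

ruby: modifies the Logstash event dynamically with Ruby code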
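A sketch that chains dissect, json, mutate and ruby on a single stdin line. The log format and all field names (day, level, payload, details, msg_length) are hypothetical; try it by typing a line such as 2019-03-24 ERROR {"user":"tom"}.

```conf
input { stdin { } }

filter {
  # dissect: split on spaces; the last field keeps the rest of the line
  dissect {
    mapping => { "message" => "%{day} %{level} %{payload}" }
  }
  # json: parse the JSON text in payload into the [details] field
  json {
    source => "payload"
    target => "details"
  }
  # mutate: rename one field and drop the now-parsed raw JSON
  mutate {
    rename       => { "level" => "log_level" }
    remove_field => ["payload"]
  }
  # ruby: add a computed field through the Event get/set API
  ruby {
    code => "event.set('msg_length', event.get('message').length)"
  }
}

output { stdout { codec => "rubydebug" } }
```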

input {

stdin {codec => "json"}

}

filter {

date {

match => ["logdate","MMM dd yyyy HH:mm:ss"]

}

}

output {

stdout {

codec => "rubydebug"

}

}

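[root@localhost logstash-6.3.1]# ./bin/logstash -f config/test.conf

Once Logstash has started, type the following line. The date filter parses logdate and, by default, writes the result to @timestamp:

{"logdate":"Jan 01 2018 12:02:08"}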
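Events the date filter cannot parse are tagged _dateparsefailure. A sketch that leaves @timestamp untouched, accepts a second format, and states locale and time zone explicitly; the target field name and the time zone are assumptions. locale => "en" lets English month names such as Jan parse even when the JVM's default locale is, for example, Chinese.

```conf
filter {
  date {
    # Try each pattern in turn until one matches
    match    => ["logdate", "MMM dd yyyy HH:mm:ss", "ISO8601"]
    locale   => "en"
    # Interpret logdate as Beijing time; the stored value is in UTC
    timezone => "Asia/Shanghai"
    # Store the result in a separate field instead of @timestamp
    target   => "logdate_parsed"
  }
}
```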

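• grok

Parses unstructured text with regular expressions: each %{PATTERN:field} applies a named pattern and stores the match in field, and an optional suffix such as :int converts the value's type. The following pattern parses an access log line in the combined format:

%{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:response:int} (?:-|%{NUMBER:bytes:int}) %{QS:referrer} %{QS:agent}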

input {

http {port => 7474}

}

filter {

grok {

match => {

"message" => '%{IPORHOST:clientip} %{USER:ident} %{USER:auth} \[%{HTTPDATE:timestamp}\] "%{WORD:verb} %{DATA:request} HTTP/%{NUMBER:httpversion}" %{NUMBER:response:int} (?:-|%{NUMBER:bytes:int}) %{QS:referrer} %{QS:agent}'

}

}

}

output {

stdout {

codec => "rubydebug"

}

}

Sample input: send each line below as the body of an HTTP POST request to port 7474; the http input puts the body into the message field.

93.180.71.3 - - [17/May/2015:08:05:32 +0000] "GET /downloads/product_1 HTTP/1.1" 304 0 "-" "Debian APT-HTTP/1.3 (0.8.16~exp12ubuntu10.21)"

93.180.71.3 - - [17/May/2015:08:05:23 +0000] "GET /downloads/product_1 HTTP/1.1" 304 0 "-" "Debian APT-HTTP/1.3 (0.8.16~exp12ubuntu10.21)"
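Lines that do not match the pattern are tagged _grokparsefailure by default. A sketch that keeps them out of the normal output by writing the raw lines to a separate file; the file path is hypothetical.

```conf
output {
  if "_grokparsefailure" in [tags] {
    # Unparsed lines: keep the raw message for later inspection
    file {
      path  => "/tmp/grok_failures.log"
      codec => line { format => "%{message}" }
    }
  } else {
    stdout { codec => "rubydebug" }
  }
}
```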

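• Logstash output

stdout: prints events to the console, as in the examples above

file: writes events to a file; the line codec below writes only the message field, one event per line: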

file {

path => "/var/log/web.log"

codec => line {format => "%{message}"}

}

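elasticsearch: sends events to Elasticsearch. In the index name, %{type} is replaced by the event's type field and %{+YYYY.MM.dd} by the date of its @timestamp, so a new index is created per type per day: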

elasticsearch {

hosts => ["http://192.168.14.10:9200"]

index => "logstash-%{type}-%{+YYYY.MM.dd}"

}
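Putting the pieces together: a sketch that reads the nginx log file used earlier, parses it with grok, and routes events by type, sending web events to Elasticsearch and everything else to the console. Host and index pattern are taken from the examples above; the routing rule itself is an assumption, and it only starts to matter once inputs with other types are added.

```conf
input {
  file {
    path           => ["/home/elk/logstsh/config/nginx_logs"]
    start_position => "beginning"
    type           => "web"
  }
}

filter {
  if [type] == "web" {
    # Built-in pattern for combined-format access log lines
    grok {
      match => { "message" => "%{COMBINEDAPACHELOG}" }
    }
  }
}

output {
  if [type] == "web" {
    elasticsearch {
      hosts => ["http://192.168.14.10:9200"]
      index => "logstash-%{type}-%{+YYYY.MM.dd}"
    }
  } else {
    stdout { codec => "rubydebug" }
  }
}
```

Elasticsearch index names must be lowercase, so any value substituted for %{type} must be lowercase as well.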